Download the code for this post here: http://static.mikehadlow.com/Mike.Vs2010Play.zip

I finally got around to downloading Visual Studio 2010 Beta 2 last weekend. One of the first things I wanted to play with was C# 4.0’s support for covariant generic interfaces such as IEnumerable<T>. These allow you to treat a collection of a subtype as a collection of its supertype, so you can write stuff like:

```csharp
IEnumerable<Cat> cats = CreateSomeCats();
IEnumerable<Animal> animals = cats;
```
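To make that fragment compile on its own, here is a minimal sketch. The `Animal` and `Cat` classes and the `CreateSomeCats` helper are hypothetical stand-ins, since the fragment doesn't define them. The commented-out line marks the limit of the feature: only generic interfaces and delegates can declare variant type parameters, so the class `List<Cat>` still doesn't convert to `List<Animal>`.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical types standing in for the ones in the fragment above.
public class Animal
{
    public string Name { get; set; }
}

public class Cat : Animal
{
}

public static class CatDemo
{
    // Hypothetical helper that returns a couple of cats.
    public static IEnumerable<Cat> CreateSomeCats()
    {
        return new List<Cat>
        {
            new Cat { Name = "Tiddles" },
            new Cat { Name = "Felix" }
        };
    }

    public static List<string> AnimalNames()
    {
        IEnumerable<Cat> cats = CreateSomeCats();

        // Legal from C# 4.0: IEnumerable<out T> is covariant in T.
        IEnumerable<Animal> animals = cats;

        // Not legal: List<T> is a class, and classes cannot declare variant type parameters.
        // List<Animal> animalList = new List<Cat>();

        var names = new List<string>();
        foreach (Animal animal in animals)
        {
            names.Add(animal.Name);
        }
        return names;
    }

    public static void Main()
    {
        foreach (string name in AnimalNames())
        {
            Console.WriteLine(name);
        }
    }
}
```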

My current client is the UK Pensions Regulator. They have an interesting, but not uncommon, domain modelling issue. They fundamentally deal with pension schemes, of which there are two distinct types: defined contribution (DC) schemes, where you contribute a negotiated amount but the amount you get when you retire is entirely at the mercy of the markets; and defined benefit (DB) schemes, where you get a negotiated amount no matter how the scheme’s investments perform. Needless to say, a DB scheme is the one you want :)

To model this they have an IScheme interface with implementations for the two different kinds of scheme. Obvious really.

Now, they need to know far more about the employers providing DB schemes than about those offering DC schemes, so they have an IEmployer interface that defines the common stuff, and then separate implementations for DB and DC employers. The model looks something like this:
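In code, a minimal sketch of the employer side of the model might look like this. `Name` and `TotalValueOfAssets` are taken from the example later in the post; any other members of the real classes are unknown and omitted here. The employers are declared as classes, which matters later: variance only applies when the type arguments are reference types.

```csharp
using System;
using System.Collections.Generic;

// Common employer information, shared by both kinds of scheme.
public interface IEmployer
{
    string Name { get; }
}

// Employers providing DB schemes carry extra information.
// (Only TotalValueOfAssets is shown; other DB-specific members are omitted.)
public class DefinedBenefitEmployer : IEmployer
{
    public string Name { get; set; }
    public decimal TotalValueOfAssets { get; set; }
}

// Employers providing DC schemes need only the common information in this sketch.
public class DefinedContributionEmployer : IEmployer
{
    public string Name { get; set; }
}

public static class EmployerDemo
{
    // Both kinds of employer can be held through the common interface.
    public static List<IEmployer> MixedEmployers()
    {
        return new List<IEmployer>
        {
            new DefinedBenefitEmployer { Name = "Widgets Ltd", TotalValueOfAssets = 12345M },
            new DefinedContributionEmployer { Name = "Tools Ltd" }
        };
    }

    public static void Main()
    {
        foreach (IEmployer employer in MixedEmployers())
        {
            Console.WriteLine("{0} ({1})", employer.Name, employer.GetType().Name);
        }
    }
}
```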

Often you want to treat schemes polymorphically: iterating through a collection of schemes and then through each scheme’s employers. With C# 3.0 this is a tricky one to model. IScheme can have an ‘Employers’ property of type IEnumerable<IEmployer>, but you have to do some ugly item-by-item casting to convert from the internal IEnumerable<specific-employer-type>. You then can’t use the same Employers property when you want to do some DB-only operation on DB employers; instead you have to provide another ‘DbEmployers’ property of type IEnumerable<DefinedBenefitEmployer>, or have the client do more nasty item-by-item casting.
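To make the C# 3.0 problem concrete, here is a rough sketch of the workaround just described, using a LINQ `Select` with an explicit cast for the item-by-item conversion. The non-generic `IScheme` and the class name `DefinedBenefitSchemeCSharp3` are hypothetical; the point is the duplicated `Employers`/`DbEmployers` pair.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public interface IEmployer
{
    string Name { get; }
}

public class DefinedBenefitEmployer : IEmployer
{
    public string Name { get; set; }
    public decimal TotalValueOfAssets { get; set; }
}

// Hypothetical C# 3.0-style scheme interface: with no variance it can only expose the base type.
public interface IScheme
{
    IEnumerable<IEmployer> Employers { get; }
}

public class DefinedBenefitSchemeCSharp3 : IScheme
{
    private readonly List<DefinedBenefitEmployer> employers = new List<DefinedBenefitEmployer>();

    // Polymorphic view. In C# 3.0, 'return employers;' would not compile here,
    // so each item is cast to IEmployer as the sequence is enumerated.
    public IEnumerable<IEmployer> Employers
    {
        get { return employers.Select(employer => (IEmployer)employer); }
    }

    // A second property is needed to reach the DB-specific members.
    public IEnumerable<DefinedBenefitEmployer> DbEmployers
    {
        get { return employers; }
    }

    public DefinedBenefitSchemeCSharp3 WithEmployer(DefinedBenefitEmployer employer)
    {
        employers.Add(employer);
        return this;
    }
}

public static class CSharp3Demo
{
    public static DefinedBenefitSchemeCSharp3 CreateScheme()
    {
        return new DefinedBenefitSchemeCSharp3()
            .WithEmployer(new DefinedBenefitEmployer { Name = "Widgets Ltd", TotalValueOfAssets = 12345M })
            .WithEmployer(new DefinedBenefitEmployer { Name = "Gadgets Ltd", TotalValueOfAssets = 56789M });
    }

    // DB-only operation: has to go through DbEmployers rather than Employers.
    public static decimal TotalDbAssets(DefinedBenefitSchemeCSharp3 scheme)
    {
        decimal total = 0M;
        foreach (DefinedBenefitEmployer employer in scheme.DbEmployers)
        {
            total += employer.TotalValueOfAssets;
        }
        return total;
    }

    // Polymorphic operation: goes through the non-generic IScheme interface.
    public static List<string> EmployerNames(IScheme scheme)
    {
        return scheme.Employers.Select(employer => employer.Name).ToList();
    }

    public static void Main()
    {
        DefinedBenefitSchemeCSharp3 scheme = CreateScheme();
        Console.WriteLine("Total DB assets: {0}", TotalDbAssets(scheme));
        foreach (string name in EmployerNames(scheme))
        {
            Console.WriteLine(name);
        }
    }
}
```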

But with C# 4.0 and covariant type parameters this can be modelled very nicely. First we have a scheme interface:

```csharp
using System.Collections.Generic;

namespace Mike.Vs2010Play
{
    public interface IScheme<out T> where T : IEmployer
    {
        IEnumerable<T> Employers { get; }
    }
}
```

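Note that the generic argument T is prefixed with the ‘out’ keyword. This tells the compiler that we will only use T in output positions, such as return types and read-only properties, never as an input. The compiler will now let us implicitly convert an IScheme<DefinedBenefitEmployer> to an IScheme<IEmployer>, with no cast required. (Variance only applies when the type arguments are reference types, which the employer classes are.)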
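A quick way to see what `out` buys you is to compare two otherwise identical interfaces, one covariant and one invariant. `IInvariantScheme<T>` and `SimpleDbScheme` are hypothetical names used only for this comparison. The commented-out line is the conversion the compiler rejects without `out`, and the two type tests show the runtime applying the same rule.

```csharp
using System;
using System.Collections.Generic;

public interface IEmployer
{
    string Name { get; }
}

public class DefinedBenefitEmployer : IEmployer
{
    public string Name { get; set; }
}

// Covariant: T is only ever handed out, never taken in.
public interface IScheme<out T> where T : IEmployer
{
    IEnumerable<T> Employers { get; }
}

// Hypothetical invariant twin: identical member, but no 'out' on T.
public interface IInvariantScheme<T> where T : IEmployer
{
    IEnumerable<T> Employers { get; }
}

// Hypothetical minimal DB scheme; its single Employers property implements both interfaces.
public class SimpleDbScheme : IScheme<DefinedBenefitEmployer>, IInvariantScheme<DefinedBenefitEmployer>
{
    private readonly List<DefinedBenefitEmployer> employers = new List<DefinedBenefitEmployer>
    {
        new DefinedBenefitEmployer { Name = "Widgets Ltd" }
    };

    public IEnumerable<DefinedBenefitEmployer> Employers
    {
        get { return employers; }
    }
}

public static class VarianceDemo
{
    public static IScheme<IEmployer> AsGeneralScheme(SimpleDbScheme scheme)
    {
        IScheme<DefinedBenefitEmployer> dbScheme = scheme;

        // Implicit conversion, allowed because T is declared 'out'.
        IScheme<IEmployer> general = dbScheme;

        // The equivalent conversion for the invariant interface does not compile:
        // IInvariantScheme<DefinedBenefitEmployer> invariantDb = scheme;
        // IInvariantScheme<IEmployer> invariantGeneral = invariantDb;

        return general;
    }

    // A type test against the covariant interface succeeds...
    public static bool SupportsCovariantView(object scheme)
    {
        return scheme is IScheme<IEmployer>;
    }

    // ...while the invariant interface only matches its exact type argument.
    public static bool SupportsInvariantView(object scheme)
    {
        return scheme is IInvariantScheme<IEmployer>;
    }

    public static void Main()
    {
        var scheme = new SimpleDbScheme();
        foreach (IEmployer employer in AsGeneralScheme(scheme).Employers)
        {
            Console.WriteLine(employer.Name);
        }
        Console.WriteLine("IScheme<IEmployer>: {0}", SupportsCovariantView(scheme));
        Console.WriteLine("IInvariantScheme<IEmployer>: {0}", SupportsInvariantView(scheme));
    }
}
```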

Let’s look at the implementation of DefinedBenefitScheme:

```csharp
using System.Collections.Generic;

namespace Mike.Vs2010Play
{
    public class DefinedBenefitScheme : IScheme<DefinedBenefitEmployer>
    {
        List<DefinedBenefitEmployer> employers = new List<DefinedBenefitEmployer>();

        public IEnumerable<DefinedBenefitEmployer> Employers
        {
            get { return employers; }
        }

        public DefinedBenefitScheme WithEmployer(DefinedBenefitEmployer employer)
        {
            employers.Add(employer);
            return this;
        }
    }
}
```
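The example further down also uses a `DefinedContributionScheme`, which isn't listed here. Assuming it simply mirrors the DB version with DC employers, a sketch might look like this, with just enough of `IEmployer` and `IScheme<out T>` to compile on its own.

```csharp
using System;
using System.Collections.Generic;

public interface IEmployer
{
    string Name { get; }
}

public class DefinedContributionEmployer : IEmployer
{
    public string Name { get; set; }
}

public interface IScheme<out T> where T : IEmployer
{
    IEnumerable<T> Employers { get; }
}

// Assumed to mirror DefinedBenefitScheme, but restricted to DC employers.
public class DefinedContributionScheme : IScheme<DefinedContributionEmployer>
{
    private readonly List<DefinedContributionEmployer> employers = new List<DefinedContributionEmployer>();

    public IEnumerable<DefinedContributionEmployer> Employers
    {
        get { return employers; }
    }

    // Fluent builder method: returns the scheme so calls can be chained.
    public DefinedContributionScheme WithEmployer(DefinedContributionEmployer employer)
    {
        employers.Add(employer);
        return this;
    }
}

public static class DcDemo
{
    public static List<string> EmployerNames()
    {
        // Covariance lets the DC scheme be held as an IScheme<IEmployer>.
        IScheme<IEmployer> scheme = new DefinedContributionScheme()
            .WithEmployer(new DefinedContributionEmployer { Name = "Tools Ltd" })
            .WithEmployer(new DefinedContributionEmployer { Name = "Fools Ltd" });

        var names = new List<string>();
        foreach (IEmployer employer in scheme.Employers)
        {
            names.Add(employer.Name);
        }
        return names;
    }

    public static void Main()
    {
        foreach (string name in EmployerNames())
        {
            Console.WriteLine(name);
        }
    }
}
```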

We can see that the ‘Employers’ property can now be defined as IEnumerable<DefinedBenefitEmployer>, so we get DB employers when we are dealing with a DB scheme. But when we treat the scheme as an IScheme<IEmployer>, the same Employers property is seen as an IEnumerable<IEmployer>, with no copying or item-by-item casting.
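One way to check that the covariant view is the same sequence rather than a converted copy is to compare references. This sketch uses a cut-down `DefinedBenefitScheme` with one pre-populated employer; `ReferenceEquals` returning true shows the conversion is a plain reference conversion.

```csharp
using System;
using System.Collections.Generic;

public interface IEmployer
{
    string Name { get; }
}

public class DefinedBenefitEmployer : IEmployer
{
    public string Name { get; set; }
    public decimal TotalValueOfAssets { get; set; }
}

public interface IScheme<out T> where T : IEmployer
{
    IEnumerable<T> Employers { get; }
}

// Cut-down version of DefinedBenefitScheme, pre-populated with one employer.
public class DefinedBenefitScheme : IScheme<DefinedBenefitEmployer>
{
    private readonly List<DefinedBenefitEmployer> employers = new List<DefinedBenefitEmployer>
    {
        new DefinedBenefitEmployer { Name = "Widgets Ltd", TotalValueOfAssets = 12345M }
    };

    public IEnumerable<DefinedBenefitEmployer> Employers
    {
        get { return employers; }
    }
}

public static class IdentityDemo
{
    public static bool SameSequence()
    {
        var dbScheme = new DefinedBenefitScheme();
        IScheme<IEmployer> general = dbScheme;

        // Through the DB type we can reach DB-specific members...
        IEnumerable<DefinedBenefitEmployer> specific = dbScheme.Employers;

        // ...and through the general view we see IEmployer, yet both are the same list object.
        IEnumerable<IEmployer> polymorphic = general.Employers;

        return ReferenceEquals(specific, polymorphic);
    }

    public static void Main()
    {
        Console.WriteLine("Same list object: {0}", SameSequence());
    }
}
```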

It’s worth noting that we can’t declare ‘WithEmployer’ as ‘WithEmployer(T employer)’ on the IScheme interface. If we try, we get an ‘Invalid variance’ compile-time error saying that T must be contravariantly valid, or something along those lines. That’s because T would then be an input parameter, and we have explicitly stated on IScheme that T will only be used for output. In any case it would make no sense for WithEmployer to be polymorphic; we deliberately want to limit DB schemes to DB employers.
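Arrays show what could go wrong if T were allowed in an input position. C# arrays of reference types are covariant (Part Two of Eric Lippert’s series, listed below, covers this), so a `DefinedBenefitEmployer[]` can be viewed as an `IEmployer[]`. Writing a DC employer into it then compiles but fails at runtime with an `ArrayTypeMismatchException`. The `out` restriction on `IScheme<T>` rules out the equivalent mistake at compile time instead. The employer classes here are minimal stand-ins.

```csharp
using System;

public interface IEmployer
{
    string Name { get; }
}

public class DefinedBenefitEmployer : IEmployer
{
    public string Name { get; set; }
}

public class DefinedContributionEmployer : IEmployer
{
    public string Name { get; set; }
}

public static class ArrayCovarianceDemo
{
    // Returns true if storing a DC employer into a DB array (viewed as IEmployer[]) is rejected at runtime.
    public static bool DcEmployerRejectedByDbArray()
    {
        DefinedBenefitEmployer[] dbEmployers = new DefinedBenefitEmployer[1];

        // Array covariance: compiles, because arrays of reference types are covariant.
        IEmployer[] employers = dbEmployers;

        try
        {
            // Also compiles (the static type is IEmployer[]), but the runtime type check fails.
            employers[0] = new DefinedContributionEmployer { Name = "Tools Ltd" };
            return false;
        }
        catch (ArrayTypeMismatchException)
        {
            return true;
        }
    }

    public static void Main()
    {
        Console.WriteLine("DC employer rejected: {0}", DcEmployerRejectedByDbArray());
    }
}
```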

Let’s look at an example. We’ll create both a DB and a DC scheme. First we’ll do some operation with the DB scheme that requires us to iterate over its employers and get DB-employer-specific information, then we’ll treat both schemes polymorphically to get the names of all employers.

```csharp
// Needs: using System; using System.Linq; (for SelectMany and Select)
public void DemonstrateCovariance()
{
    // we can create a defined benefit scheme with specialised employers
    var definedBenefitScheme = new DefinedBenefitScheme()
        .WithEmployer(new DefinedBenefitEmployer { Name = "Widgets Ltd", TotalValueOfAssets = 12345M })
        .WithEmployer(new DefinedBenefitEmployer { Name = "Gadgets Ltd", TotalValueOfAssets = 56789M });

    // we can treat the DB scheme normally, outputting its specialised employers
    Console.WriteLine("Assets for DB schemes:");
    foreach (var employer in definedBenefitScheme.Employers)
    {
        Console.WriteLine("Total Value of Assets: {0}", employer.TotalValueOfAssets);
    }

    // we can create a defined contribution scheme with its specialised employers
    var definedContributionScheme = new DefinedContributionScheme()
        .WithEmployer(new DefinedContributionEmployer { Name = "Tools Ltd" })
        .WithEmployer(new DefinedContributionEmployer { Name = "Fools Ltd" });

    // with covariance we can also treat the schemes polymorphically
    var schemes = new IScheme<IEmployer>[] { definedBenefitScheme, definedContributionScheme };

    // we can also treat the schemes' employers polymorphically
    var employerNames = schemes.SelectMany(scheme => scheme.Employers).Select(employer => employer.Name);

    Console.WriteLine("\r\nNames of all employers:");
    foreach (var name in employerNames)
    {
        Console.WriteLine(name);
    }
}
```

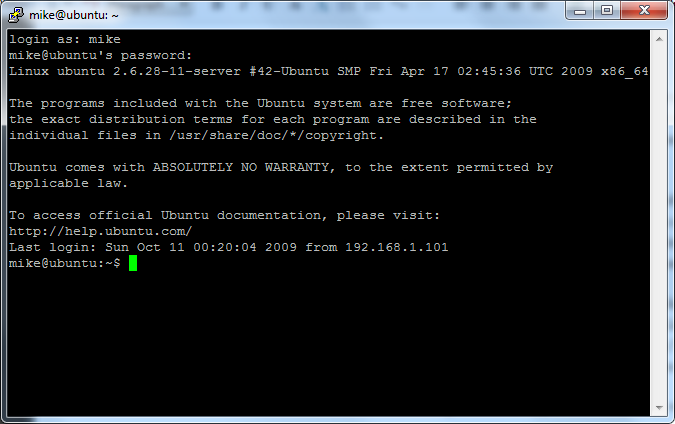

When we run this, we get the following output:

```text
Assets for DB schemes:
Total Value of Assets: 12345
Total Value of Assets: 56789

Names of all employers:
Widgets Ltd
Gadgets Ltd
Tools Ltd
Fools Ltd
```

It’s worth reading up on co- and contravariance: why they matter and how they can help you. Eric Lippert has a great series of blog posts with all the details:

Covariance and Contravariance in C#, Part One

Covariance and Contravariance in C#, Part Two: Array Covariance

Covariance and Contravariance in C#, Part Three: Method Group Conversion Variance

Covariance and Contravariance in C#, Part Four: Real Delegate Variance

Covariance and Contravariance In C#, Part Five: Higher Order Functions Hurt My Brain

Covariance and Contravariance in C#, Part Six: Interface Variance

Covariance and Contravariance in C# Part Seven: Why Do We Need A Syntax At All?

Covariance and Contravariance in C#, Part Eight: Syntax Options

Covariance and Contravariance in C#, Part Nine: Breaking Changes

Covariance and Contravariance in C#, Part Ten: Dealing With Ambiguity

Covariance and Contravariance, Part Eleven: To infinity, but not beyond

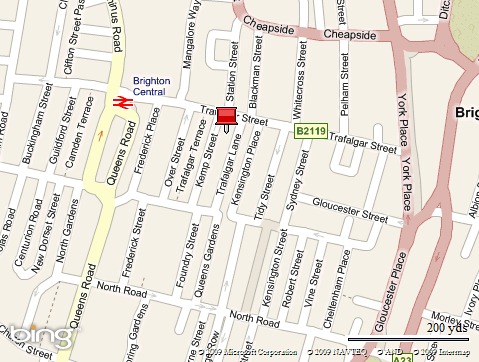

A month ago I started a new reading regime where I get up an hour earlier and head off to a café for an hour’s reading before work. It’s a very nice arrangement, since I seem to be in the perfect state of mind for a bit of technical reading first thing in the morning, and an hour is just about the right length of time to absorb stuff before my brain starts to hit overload.
